BMO-Boy!

One of my summer projects was to build a BMO robot, with the help of a 3D printer and a custom software stack I developed myself. Today, I’m presenting my own customised BMO-Boy!

This project is based on Orbian’s BMO-Boy, with a few differences here and there.

I basically ditched the robotics and opted instead to build a somewhat complicated software stack for powering BMO, since I wanted to play with voice recognition and use some fairly complex libraries. Along the way, I also realized I could easily implement this in Rust, a language I also wanted to tinker with this summer.

The result is this!

Hardware details

My own BMO-Boy uses the following hardware, which differs a bit from Orbian’s design:

- Instead of Adafruit’s 3.5” TFT screen, I used a suspiciously similar one found on AliExpress, which differs only in the controller board used. Its only drawback is that its mounting holes are far too small for the 3D model’s screw holes, so it is crammed inside BMO without being mounted (not that it has much space to move!)

- I used a Raspberry Pi Zero W, and a ReSpeaker 2-Mics pHAT for audio input and output. Nothing else.

- To save a little more money, I didn’t use any batteries. I opted instead to just plug the Pi Zero into a charger, and the screen into a DC charger.

- I bought a GPIO header extender to make space between the HAT and the Pi Zero for the RCA cables, which have to be soldered onto the Pi Zero to get video output to the screen. I used a solderless Dupont-to-female-RCA connector like this one to simplify things.

- I used M2.5 brass inserts and screws for fixing the Pi Zero and its HAT to the backplate, and also for fixing the backplate to the main body (since I only had M2.5 screws, I had to drill through its holes).

The HAT’s job is to pick up audio and stream it to a (hopefully) more powerful computer.

As for the 3D print, I simply printed Orbian’s model and painted it with Tamiya’s TS41 spray paint, which was the closest color to BMO’s I could get my hands on. Orbian has a handy build guide on YouTube, though the only relevant part here is the painting.

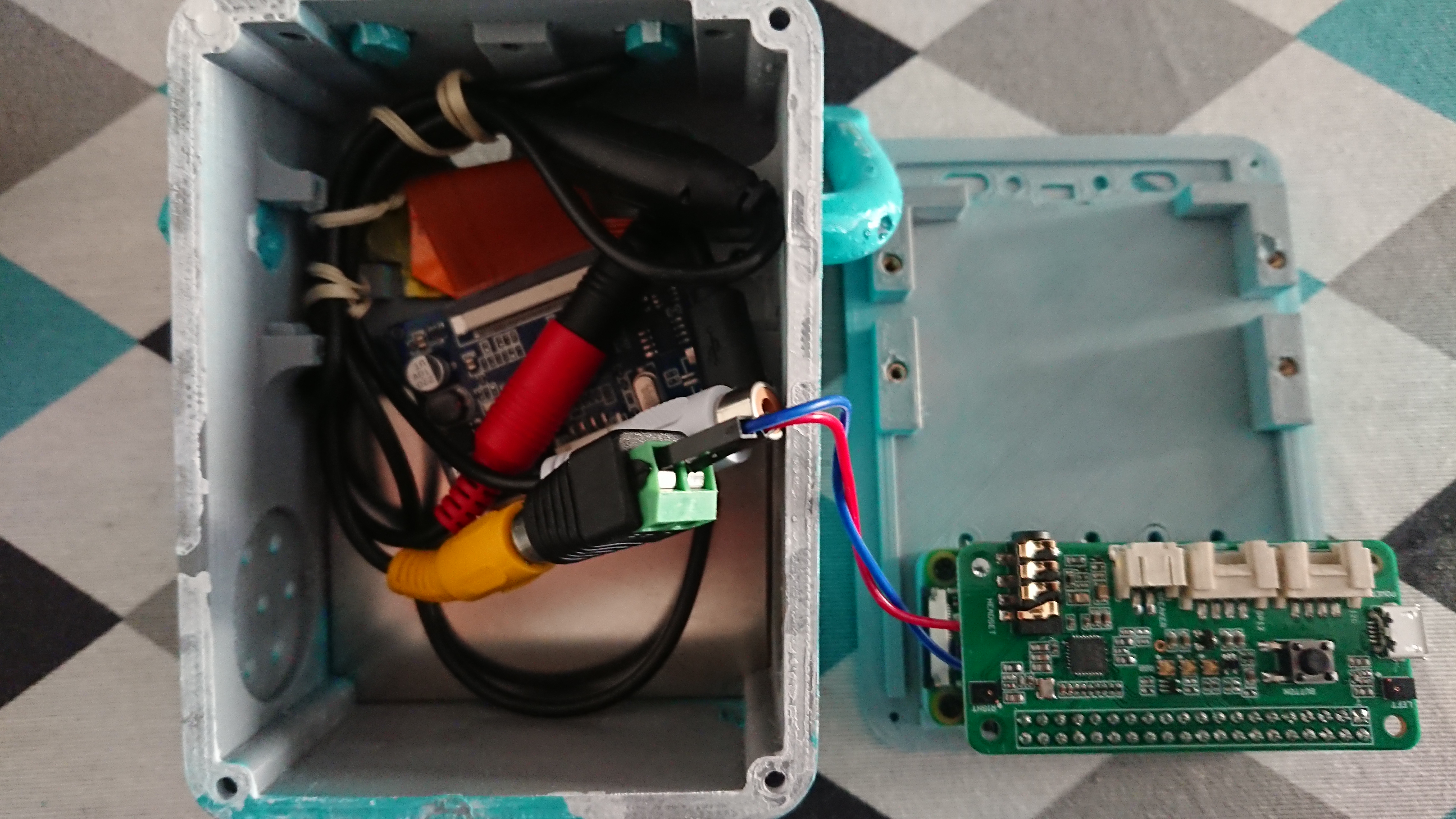

The components barely fit inside, so maybe another 3D model could be better. Other contenders would be this one, which I attempted to print but didn’t have enough precision for the screws, and this improved version which I haven’t tried.

It is rather tight in there…


Software details

The software running BMO consists of three parts:

- voice2json, an amazing piece of software that turns audio input into recognized (and configurable) intents, each with an associated confidence value, by making use of trained speech recognition models.

  - voice2json is in charge of parsing the audio input picked up by the Raspberry Pi’s HAT into intents.

  - As its name indicates, its output comes as JSON strings, so we need a parser for it, which is the client!

- A simple client, which is in charge of:

  - Listening for JSON input from stdin and parsing it into intents.

  - Sending intents with enough confidence to the server.

- A somewhat misleading server, which is in charge of:

  - Displaying BMO’s face on the screen, and playing audio tracks.

  - Listening over the network for intents, which dictate which faces and audio tracks should be played.

Both voice2json and the client run on a proper computer, while the server runs on the Pi Zero. The reason is that the Pi Zero is not exactly powerful, and cramming machine learning, JSON parsing and a “game” onto a single-core ARM CPU is not really a good idea.
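To make the client's second job more concrete, here is a minimal sketch of the confidence gate, assuming the JSON has already been parsed into a name and a confidence value. The `Intent` type, the `MIN_CONFIDENCE` value and the one-name-per-line output format are all assumptions made for illustration, not necessarily what the real client does.

```rust
use std::io::{self, Write};

/// A recognised intent, already parsed from voice2json's JSON output.
/// (Hypothetical type: the real client's data model may differ.)
#[derive(Debug, Clone, PartialEq)]
pub struct Intent {
    pub name: String,
    pub confidence: f64,
}

/// Hypothetical threshold below which an intent is ignored.
pub const MIN_CONFIDENCE: f64 = 0.8;

/// Writes the intent's name as a single line to `out` if its confidence
/// reaches `threshold`. Returns `Ok(true)` when the intent was forwarded.
/// `out` can be a `TcpStream` towards the server, or a `Vec<u8>` in tests.
pub fn forward_if_confident<W: Write>(
    intent: &Intent,
    threshold: f64,
    out: &mut W,
) -> io::Result<bool> {
    if intent.confidence < threshold {
        // Not sure enough about what was said: drop it silently.
        return Ok(false);
    }
    writeln!(out, "{}", intent.name)?;
    out.flush()?;
    Ok(true)
}
```

Keeping the gate generic over `Write` means the same function can be pointed at a network socket in practice and at an in-memory buffer when testing.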

The client and server are both written in Rust. While the client is fairly simple, the server makes use of more complex libraries such as SDL (for displaying graphics) and SoLoud (for playing audio). It also makes extensive use of Rust’s amazing concurrency capabilities, which was a great learning experience!
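As a rough illustration of the kind of concurrency involved, the sketch below runs a network listener on a background thread and hands intents to the main (rendering) loop through a channel. It uses only the standard library; the function names and the wire format (one intent name per line over TCP) are assumptions, and the real server may be organised quite differently.

```rust
use std::io::{BufRead, BufReader};
use std::net::TcpListener;
use std::sync::mpsc::{self, Receiver};
use std::thread;

/// Spawns a background thread that accepts connections on `listener` and
/// sends every non-empty line it reads down a channel as an intent name.
/// Connections are handled one at a time, which is enough for one client.
pub fn spawn_intent_listener(listener: TcpListener) -> Receiver<String> {
    let (tx, rx) = mpsc::channel();
    thread::spawn(move || {
        for stream in listener.incoming() {
            let stream = match stream {
                Ok(stream) => stream,
                Err(_) => continue, // failed connection attempt: try the next one
            };
            // Stop reading this connection at the first I/O error or at EOF.
            for line in BufReader::new(stream).lines().map_while(Result::ok) {
                let name = line.trim();
                if name.is_empty() {
                    continue;
                }
                if tx.send(name.to_string()).is_err() {
                    return; // the receiving side is gone, so shut down
                }
            }
        }
    });
    rx
}

/// Non-blocking check meant to be called once per frame by the render loop.
pub fn poll_intent(rx: &Receiver<String>) -> Option<String> {
    rx.try_recv().ok()
}
```

The point of the channel is that the render loop never blocks on the network: it calls `poll_intent` each frame and keeps drawing the current face when nothing new has arrived.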

Functionalities

BMO’s functionalities are the following:

- It can recognise an arbitrary number of speech expressions, as long as they are associated with simple intents (anger, surprise, sing_a_song, say_hello…)

- It can match an arbitrary number of faces and (optionally) audio tracks to each intent. The result is that BMO can respond to intents with:

  - A randomised static face image among those associated with the intent, with a configurable time limit.

  - A series of (also randomised) faces switching at fixed intervals while an audio track plays in the background (in other words, BMO can say anything in any way you want!)

  - An associated function. Currently, BMO can:

    - Display the current weather. (This is optional, since it requires an API key from OpenWeatherMap.)

    - Configure (by voice!) and display a chronometer, with a configurable alarm playing at the end.
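The intent-to-face matching described above could look roughly like the following. This is only a sketch: the `Reaction` type, the file names in the usage example and the standard-library-only source of randomness are assumptions made here, and the actual server may well use a proper random number crate instead.

```rust
use std::collections::hash_map::RandomState;
use std::collections::HashMap;
use std::hash::{BuildHasher, Hasher};

/// What BMO shows (and optionally plays) in response to one intent.
/// (Hypothetical type, for illustration only.)
pub struct Reaction {
    pub faces: Vec<String>,
    pub audio: Option<String>,
}

/// Returns a pseudo-random index in `0..len` using only the standard library.
/// Each `RandomState` is created with fresh random keys, so hashing nothing
/// still yields a different value per call. Good enough for picking a face,
/// not for anything security related. `len` must be non-zero.
fn random_index(len: usize) -> usize {
    let value = RandomState::new().build_hasher().finish();
    (value % len as u64) as usize
}

/// Picks one of the faces registered for `intent`, or `None` when the intent
/// is unknown or has no faces.
pub fn pick_face<'a>(
    reactions: &'a HashMap<String, Reaction>,
    intent: &str,
) -> Option<&'a str> {
    let reaction = reactions.get(intent)?;
    if reaction.faces.is_empty() {
        return None;
    }
    Some(reaction.faces[random_index(reaction.faces.len())].as_str())
}
```

For the "talking" case, the same `pick_face` call would simply be repeated at a fixed interval for as long as the audio track in `Reaction::audio` is playing.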

The setup

All software is intended to run on Linux (voice2json included).

- On the Pi Zero’s side, any Linux distribution should work, as long as it has the SDL2, SDL2-image, SDL2-ttf and OpenAL libraries required to run the server. I used a headless Raspbian image with no DE installed, which I controlled via SSH. bmOS_server should automatically pick up the display and show itself in fullscreen mode.

- On the “auxiliary” host’s side, again any distribution should work, as long as it can run voice2json. I used Arch Linux alongside a Docker image for voice2json. There are native voice2json packages available for Debian, too.

- To pick up and stream audio from the Pi Zero’s microphones, an external solution is needed. I simply used a bash script, run from the host that runs the client, to record and transmit audio via SSH. The script is detailed in the client’s documentation, but any other solution can be used as long as voice2json accepts its output.

- To get audio out of the Raspberry Pi and hear BMO’s voice, the 3.5mm jack on the HAT can be used, but it adds yet another cable to manage. I chose to simply stream it too.

Source code

- Everything is open source, so anyone can play with it. I doubt anyone will want to make use of this since it is quite a simplistic and specific project, but who knows!

- The source code for the client is hosted on GitHub and crates.io, with its more in-depth documentation hosted on docs.rs.

- The source code for the server is also hosted on GitHub and crates.io, with its documentation hosted on docs.rs.

- voice2json, synesthesiam’s project, is hosted here, with its documentation hosted on its main webpage.

Assets

Since all assets should be for personal use, I didn’t include them in the source code in order to avoid any potential problems. I simply ripped audio tracks from the show, and used Orbian’s face assets hosted here, along with a few of my own, so that there could be more variety when talking. Nonetheless, feel free to send me an email at bmo@samueldgv.com if you want my assets.